Web Exclusive

Existing and emerging bioinformatics approaches are creating a brand new breed of toxicogenomics.

Drug safety continues to grow in importance for the pharmaceutical and biotech industry, with late-stage attrition driving the need to fail compounds with potential side effects early in the discovery process. Toxicogenomics uses gene expression profiling to assess the effects of compounds on genes, both to identify areas for further investigation and to compare results against known toxic gene signatures from internal, external, and third-party sources.

New approaches to data analysis, integration, and visualization can put scientists in control of their data (Figure 1), remove bottlenecks in analysis, and enable semi-automated generation of safety reports for new compounds. These reports can incorporate more traditional safety results and legacy data to give a complete overview. In some cases, the results can also be delivered via interactive Web applications with specific pages for each domain expert in a multidisciplinary drug discovery team, which enables detailed exploration of the safety report. By combining data analysis, bioinformatics annotation, pathway analysis, and text mining, scientists can fully explore and understand their results.

Using gene expression to assess the possible toxicity of new lead compounds is now common practice in many mechanistic and predictive toxicology groups.1-3 The falling cost per assay has created a new breed of toxicogenomics experiments that include more study subjects and/or assayed time points and can be carried out at multiple sites. This increase in data volume is creating an analysis bottleneck for technical teams, compounded by the need to control data quality consistently, analyze results, annotate gene lists, and provide visualization of results to large groups of users. Established experimental approaches now ensure that results can be compared,4 but analysis and annotation must now be applied consistently across much larger and more disparate data sets.

Increasingly, best-practice toxicogenomic analysis protocols are captured as workflows (visual programs), which can drive automated reports and be shared across groups (Figure 2). Workflow technologies are common in many areas of drug discovery5 and in translational research,6 which has similar integration, analysis, and visualization needs. Workflow and data pipelining platforms are also used to power interactive Web pages that provide analysis and visualization capabilities to end-user scientists. These interactive analytical Web applications are removing analysis bottlenecks and opening up data for scientists to explore in a guided and consistent way. New developments in interactive Web-based delivery allow millions of data points to be visualized together, enabling manual removal of outliers in the data, a common request that has in the past been difficult to achieve over the Web and with ever-growing data sets.
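To make the idea of a captured protocol concrete, here is a minimal Python sketch of a linear workflow: named steps run in order over a shared context, and each run records which steps executed so a report can show its provenance. The `Workflow` class, the step functions, and the 0.8 QC threshold are all hypothetical; commercial workflow platforms add branching, visual editing, and scheduling, none of which is modeled here.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
# A step reads the shared context and returns updates to merge back into it.
Step = Callable[[Context], Context]


@dataclass
class Workflow:
    """Hypothetical minimal linear workflow: named steps run in order."""
    name: str
    steps: List[Tuple[str, Step]] = field(default_factory=list)

    def add(self, label: str, step: Step) -> "Workflow":
        self.steps.append((label, step))
        return self  # returning self lets steps be chained

    def run(self, inputs: Context) -> Context:
        context: Context = dict(inputs)
        context["provenance"] = []
        for label, step in self.steps:
            context.update(step(context))
            context["provenance"].append(label)  # record each step as it completes
        return context


def qc_filter(ctx: Context) -> Context:
    # Hypothetical QC rule: keep arrays whose quality score is at least 0.8.
    return {"arrays": [a for a in ctx["arrays"] if a["qc"] >= 0.8]}


def summary_report(ctx: Context) -> Context:
    return {"report": f"{ctx['study']}: {len(ctx['arrays'])} arrays passed QC"}


tox_screen = (
    Workflow("liver-tox-screen")
    .add("QC filter", qc_filter)
    .add("Summary report", summary_report)
)

result = tox_screen.run({
    "study": "Study-001",
    "arrays": [
        {"id": "A1", "qc": 0.95},
        {"id": "A2", "qc": 0.60},
        {"id": "A3", "qc": 0.88},
    ],
})
print(result["report"])  # Study-001: 2 arrays passed QC
```

Because each step only reads from and returns dictionary entries, steps can be reordered or replaced independently, which is the kind of change the technical teams described here would make when maintaining a shared protocol.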

High usability plus best practice analysis

Supporting the analysis of large volumes of gene expression data puts significant strain on bioinformatics and statistical groups. Automated reports and self-service Web portals allow a large user base to be supported by a small technical team. Using the Web portals, end users can quickly run analysis workflows that encode best practices designed by internal statisticians. For example, typical analysis workflows may run a combination of Tukey tests, one- and two-way analysis of variance (ANOVA), principal component analysis (PCA), and in-house R and SAS analyses to produce a set of highly differentially expressed genes. This ensures that analyses are valid and consistent throughout the company. End users are insulated from IT complexity: they do not need to know how to configure analytical tools, and they can make the best use of these techniques with minimal training. Technical teams, in turn, can easily modify workflows and Web pages, swap in other analysis algorithms, or add new ones to the system.
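As an illustration of one building block such a workflow might contain, the sketch below computes a one-way ANOVA F statistic per gene across dose groups and ranks genes by it. It is a deliberate simplification using only the Python standard library: it omits the Tukey post hoc tests, two-way ANOVA, and PCA mentioned above, and it ranks by F rather than by p-values with multiple-testing correction. For real analyses, `scipy.stats.f_oneway` (which also returns a p-value) or the statisticians' own R/SAS code would be the reference. The gene names and expression values are made up.

```python
from statistics import mean
from typing import Dict, List, Tuple


def one_way_anova_f(groups: List[List[float]]) -> float:
    """One-way ANOVA F statistic for one gene across treatment groups (e.g. dose levels)."""
    values = [x for g in groups for x in g]
    k, n = len(groups), len(values)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and some replicates")
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of replicates around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def rank_genes(expression: Dict[str, List[List[float]]], top_n: int = 10) -> List[Tuple[str, float]]:
    """Rank genes by F statistic, largest (most group-dependent) first."""
    scored = [(gene, one_way_anova_f(groups)) for gene, groups in expression.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


# Made-up log-expression values: control, low dose, high dose (three replicates each).
expression = {
    "GeneA": [[1.0, 1.1, 0.9], [2.0, 2.1, 1.9], [3.0, 3.1, 2.9]],
    "GeneB": [[1.0, 1.2, 0.8], [1.1, 0.9, 1.0], [1.0, 1.1, 0.9]],
}
for gene, f in rank_genes(expression):
    print(f"{gene}\tF = {f:.1f}")
```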

The resulting gene lists can be automatically compared with known gene signatures for toxicity. Because toxicogenomics is well established in many companies, it is common to have an internal set of known gene signatures associated with drug toxicity. This internal knowledge can be supplemented from public sources such as the National Center for Biotechnology Information (NCBI) Gene Expression Omnibus and HuGEIndex.org, which provide gene expression data on various known drugs and side effects. Major research programs such as the European Union InnoMed PredTox project7 are also building up knowledge about toxicity profiles.
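One common way to score a gene list against a signature is an over-representation test: how surprising is the overlap, given how many genes were measured? The sketch below assumes that test (a hypergeometric upper-tail probability); the article does not say which comparison method is used, and the signature names, gene identifiers, and 100-gene universe are hypothetical. It also assumes every signature gene was among the measured genes.

```python
from math import comb
from typing import Dict, List, Set, Tuple


def hypergeom_upper_tail(overlap: int, universe: int, sig_size: int, list_size: int) -> float:
    """P(X >= overlap): chance of at least this many signature genes in a random list.

    X is hypergeometric: list_size genes drawn without replacement from a universe of
    measured genes, sig_size of which belong to the signature.
    """
    favourable = sum(
        comb(sig_size, k) * comb(universe - sig_size, list_size - k)
        for k in range(overlap, min(sig_size, list_size) + 1)
    )
    return favourable / comb(universe, list_size)


def score_signatures(
    gene_list: Set[str], signatures: Dict[str, Set[str]], universe: int
) -> List[Tuple[str, int, float]]:
    """Return (signature, overlap, p-value) tuples, most significant first."""
    results = []
    for name, sig_genes in signatures.items():
        overlap = len(gene_list & sig_genes)
        p = hypergeom_upper_tail(overlap, universe, len(sig_genes), len(gene_list))
        results.append((name, overlap, p))
    return sorted(results, key=lambda r: r[2])


# Hypothetical inputs: 100 measured genes, a 10-gene hit list, two 8-gene signatures.
hits = {f"G{i}" for i in range(10)}
signatures = {
    "hepatotox_sig": {"G0", "G1", "G2", "G3", "G4", "X1", "X2", "X3"},
    "nephrotox_sig": {f"Y{i}" for i in range(8)},
}
for name, overlap, p in score_signatures(hits, signatures, universe=100):
    print(f"{name}: overlap={overlap}, p={p:.2g}")
```

A signature with no overlap scores p = 1, since the tail sum then covers every possible outcome.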

Providing biological context

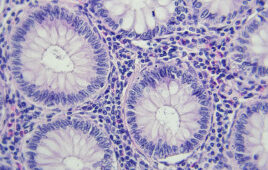

Annotation from standard bioinformatics resources helps explain the roles of specific genes. Workflows can be configured to retrieve data automatically from NCBI, the European Bioinformatics Institute (EBI), and other sources, bringing in information from databases such as Entrez Gene, UniProt, and dbSNP.8 Annotation of gene families, function, and polymorphisms provides valuable insight into possible side effects, particularly in key organs; for example, cytochrome P450 genes and their role in drug metabolism in the liver. Workflows can also retrieve Gene Ontology Consortium annotations on cellular component, biological process, and molecular function, and Online Mendelian Inheritance in Man (OMIM) data on gene associations with disease. These annotation workflows are easy to maintain and modify, which matters because the formats of these sources can change quite frequently.
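A minimal sketch of an annotation step, assuming NCBI's E-utilities ESummary service for the `gene` database with `retmode=json`. The parsing assumes the JSON layout ESummary is understood to return (a `result` object holding a `uids` list and one record per ID with `name` and `description` fields); check this against NCBI's current documentation before relying on it. NCBI also asks clients to respect request-rate limits and to identify their tool, which this sketch does not handle. The example payload is abbreviated and illustrative so the usage runs offline.

```python
import json
from typing import Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen

ESUMMARY = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esummary.fcgi"


def esummary_url(gene_ids: List[str]) -> str:
    # db=gene targets Entrez Gene; retmode=json requests JSON instead of XML.
    query = urlencode({"db": "gene", "id": ",".join(gene_ids), "retmode": "json"})
    return f"{ESUMMARY}?{query}"


def parse_gene_summaries(payload: dict) -> Dict[str, Dict[str, str]]:
    """Pull symbol and description per gene ID (assumed ESummary JSON layout)."""
    result = payload.get("result", {})
    annotations = {}
    for uid in result.get("uids", []):
        record = result.get(uid, {})
        annotations[uid] = {
            "symbol": record.get("name", ""),
            "description": record.get("description", ""),
        }
    return annotations


def fetch_gene_summaries(gene_ids: List[str]) -> Dict[str, Dict[str, str]]:
    # Live network call; not exercised in the offline example below.
    with urlopen(esummary_url(gene_ids), timeout=30) as response:
        return parse_gene_summaries(json.load(response))


# Abbreviated, illustrative payload so the example runs without network access.
sample = {
    "result": {
        "uids": ["1576"],
        "1576": {"name": "CYP3A4", "description": "cytochrome P450 family 3 subfamily A member 4"},
    }
}
print(esummary_url(["1576"]))
print(parse_gene_summaries(sample))
```

Keeping URL construction and parsing separate from the network call means the parser can be re-tested quickly when a source changes its format.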

Commercial metabolic pathway tools from GeneGo Inc. (St. Joseph, Mich.), Ingenuity Systems (Redwood City, Calif.), and Ariadne Genomics (Rockville, Md.) are commonplace in gene expression analysis and are consequently important tools for toxicology.9 These tools provide programmatic interfaces that workflow platforms can use to submit a gene list, configure the tool, and retrieve the results. The user can then simply click a button to display graphical pathway views and understand the scientific context, without needing to import and export data.
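Because each vendor's programmatic interface is different (and none is reproduced here), a workflow can isolate them behind a common contract. The sketch below is hypothetical throughout: `PathwayTool`, `PathwayHit`, `LocalGeneSetTool`, and `pathway_step` are invented names, and the in-memory stand-in only counts overlaps between a gene list and illustrative pathway gene sets. It does not represent the GeneGo, Ingenuity, or Ariadne APIs.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol, Set


@dataclass(frozen=True)
class PathwayHit:
    pathway: str
    genes_in_list: int  # how many submitted genes fall in this pathway
    pathway_size: int


class PathwayTool(Protocol):
    """Hypothetical contract that a wrapper around each vendor tool would satisfy."""

    def analyze(self, genes: Set[str], species: str) -> List[PathwayHit]:
        ...


class LocalGeneSetTool:
    """Offline stand-in backed by an in-memory pathway -> gene set map."""

    def __init__(self, pathways: Dict[str, Set[str]]) -> None:
        self.pathways = pathways

    def analyze(self, genes: Set[str], species: str) -> List[PathwayHit]:
        # `species` is accepted to match the contract; this stub ignores it.
        hits = [
            PathwayHit(name, len(genes & members), len(members))
            for name, members in self.pathways.items()
            if genes & members
        ]
        return sorted(hits, key=lambda h: h.genes_in_list, reverse=True)


def pathway_step(tool: PathwayTool, genes: Set[str], species: str = "rat") -> List[PathwayHit]:
    # The workflow step depends only on the contract, so tools can be swapped freely.
    return tool.analyze(genes, species)


# Illustrative gene sets only; not curated pathway definitions.
tool = LocalGeneSetTool({
    "xenobiotic_metabolism": {"Cyp1a1", "Cyp1a2", "Cyp3a2"},
    "oxidative_stress": {"Hmox1", "Nqo1"},
    "cell_cycle": {"Ccnb1", "Cdk1"},
})
for hit in pathway_step(tool, {"Cyp1a1", "Cyp1a2", "Hmox1", "Gapdh"}):
    print(hit)
```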


Further validation using text mining

Genes of interest can also be validated automatically using workflow-based text mining that queries PubMed for matches between gene lists, side effects of interest, and appropriate linguistic relationships. Text mining tools can be difficult to configure and use, which is one reason they are not widely used. Text mining workflows hide this complexity: they take a gene list as input, retrieve a list of synonyms from public sources, and look for co-occurrence of those synonyms with disease terms of interest in an abstract. The resulting abstracts can then be further filtered by the proximity of disease, ontology, and gene terms to give the user a set of highly relevant abstracts, so users can quickly find documents that validate their findings.
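The co-occurrence and proximity filtering described above can be sketched as follows, assuming the abstracts have already been retrieved from PubMed. The function names, the 10-token window, and the example abstracts and synonym lists are hypothetical; matching is on single lowercase tokens, so multi-word disease terms and hyphenated synonyms such as "HO-1" would need extra handling. Real text mining tools add linguistic analysis that this sketch does not attempt.

```python
import re
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> List[str]:
    # Lowercase alphanumeric tokens; punctuation and hyphens act as separators.
    return re.findall(r"[a-z0-9]+", text.lower())


def co_occurrences(
    abstracts: Dict[str, str],
    synonyms: Dict[str, Set[str]],
    disease_terms: Set[str],
    window: int = 10,
) -> List[Tuple[str, str, int]]:
    """Return (abstract_id, gene, token_distance) where a gene synonym and a disease
    term occur within `window` tokens of each other, closest pairs first."""
    disease = {t.lower() for t in disease_terms}
    hits = []
    for doc_id, text in abstracts.items():
        tokens = tokenize(text)
        disease_pos = [i for i, tok in enumerate(tokens) if tok in disease]
        if not disease_pos:
            continue  # no disease term at all, so no co-occurrence is possible
        for gene, names in synonyms.items():
            lowered = {n.lower() for n in names}
            gene_pos = [i for i, tok in enumerate(tokens) if tok in lowered]
            if not gene_pos:
                continue
            distance = min(abs(g - d) for g in gene_pos for d in disease_pos)
            if distance <= window:
                hits.append((doc_id, gene, distance))
    return sorted(hits, key=lambda h: h[2])


# Made-up abstracts and illustrative synonym lists.
abstracts = {
    "A1": "Induction of CYP3A4 was associated with hepatotoxicity in treated rats.",
    "A2": "Hepatotoxicity was observed in the high dose group after fourteen days of "
          "repeated oral dosing with the test compound, while HMOX1 expression was unchanged.",
    "A3": "CYP3A4 expression was measured in cultured hepatocytes.",
}
synonyms = {"CYP3A4": {"CYP3A4"}, "HMOX1": {"HMOX1", "HO1"}}
disease_terms = {"hepatotoxicity", "hepatotoxic"}
print(co_occurrences(abstracts, synonyms, disease_terms))
```

In A2 the gene and disease term are 20 tokens apart, so the default window drops that abstract; widening the window trades precision for recall.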

Workflows can also generate citation graphs of authors working on particular diseases or gene families, enabling scientists to quickly identify key experts and organizations for possible collaborations. Public domain tools such as Cytoscape10 can display these citation maps, which can be generated from commercial sources such as Thomson Reuters or from public sites such as Google Scholar.
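A sketch of the graph-building step, assuming a simplified co-authorship network (authors linked when they share a paper) rather than a full citation graph, and assuming publication records have already been retrieved. It writes edges in Cytoscape's Simple Interaction Format (SIF), one tab-separated `source`, `interaction`, `target` line per edge, and ranks authors by number of distinct collaborators. The author names and paper IDs are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def coauthor_edges(papers: Dict[str, List[str]]) -> Counter:
    """Count shared papers for each unordered author pair."""
    edges: Counter = Counter()
    for authors in papers.values():
        # sorted(set(...)) gives each pair one canonical (a, b) orientation.
        for a, b in combinations(sorted(set(authors)), 2):
            edges[(a, b)] += 1
    return edges


def to_sif(edges: Counter, interaction: str = "coauthor") -> str:
    """Serialize edges as tab-separated SIF lines that Cytoscape can import."""
    return "".join(f"{a}\t{interaction}\t{b}\n" for a, b in sorted(edges))


def most_connected(edges: Counter, top_n: int = 3) -> List[Tuple[str, int]]:
    """Rank authors by number of distinct co-authors (node degree)."""
    degree: Counter = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return degree.most_common(top_n)


papers = {
    "P1": ["AuthorC", "AuthorA", "AuthorB"],
    "P2": ["AuthorC", "AuthorB"],
    "P3": ["AuthorC", "AuthorD"],
}
edges = coauthor_edges(papers)
print(to_sif(edges), end="")
print(most_connected(edges, top_n=1))  # [('AuthorC', 3)]
```

SIF carries no edge weights, so the shared-paper counts would need to be exported separately as an edge attribute table if the map should reflect collaboration strength.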

Conclusion

The use of workflow technologies and interactive Web applications is saving considerable time and empowering scientists to take control of their toxicogenomic and other preclinical study data. An integrated environment for data analysis, annotation, and validation gives scientists a much greater understanding of their results and potential side effects while letting them benefit from best-practice analysis procedures. As the use of other ‘omics technologies in toxicology grows, such as proteomics and metabolomics, workflow systems can integrate and analyze these data alongside existing data to provide a future-proof environment.

References

1. Daston, G.P. Gene Expression, Dose-Response, and Phenotypic Anchoring: Applications for Toxicogenomics in Risk Assessment. Toxicological Sciences. 2008;105(2):233–234.

2. Zidek, N. et al. Acute Hepatotoxicity: A Predictive Model Based on Focused Illumina Microarrays. Toxicological Sciences. 2007;99(1):289–302.

3. Guerreiro, N. et al. Toxicogenomics in Drug Development. Toxicologic Pathology. 2003;31:471–479.

4. Quackenbush, J. Microarray data normalization and transformation. Nature Genetics.

5. Shon, J. et al. Scientific workflows as productivity tools for drug discovery. Current Opinion in Drug Discovery & Development. 2008;11(3):381-388.

6. Beaulah, S.A. et al. Addressing informatics challenges in translational research with workflow technology. Drug Discovery Today. 2008;13(17-18):771-777.

7. König, J. Systems Toxicology, a merger between toxicology and “omics”, to predict toxicity earlier in drug development. Drug Discovery & Development. 2008;11(8):28-30.

8. https://taverna.sourceforge.net.

9. Xu, E.Y. et al. Integrated Pathway Analysis of Rat Urine Metabolic Profiles and Kidney Transcriptomic Profiles To Elucidate the Systems Toxicology of Model Nephrotoxicants. Chemical Research in Toxicology. 2008;21(8):1548–1561.

10. https://www.cytoscape.org/

About the Author

Jonathan Sheldon is the Chief Scientific Officer at InforSense and is responsible for directing R&D. Previously he was CTO for Confirmant and Head of Bioinformatics at Roche Welwyn, UK. Dr. Sheldon holds a PhD in Molecular Biology/Biochemistry from the University of Cambridge.
