[Image Teerayut/Adobe Stock]

This article explores strategies to harness AI for data management in clinical trials while avoiding potential pitfalls such as data integrity issues and large language model hallucinations, which can lead to unreliable or distorted outputs.

1. Understand the complexity of clinical trial data

The complexity of clinical trial data can be difficult for someone outside the field to appreciate, according to Jeff Elton, CEO of ConcertAI. “There can be 60 to 70 different levels of inclusion and exclusion criteria,” Elton explained. Examples can include lab values, past drug exposure, drug responses and even speculative questions like, ‘Is this patient expected to live another six months?’ “It’s a tedious process, requiring researchers to navigate through dozens of screens, documents and applications for each patient,” Elton said. “It can take hours, given the meticulous nature of the work.”

The inherent complexity and variety in clinical trial data pose further challenges. In oncology, for instance, modern clinical trials often have a multifaceted design, with complex tissue-sampling, molecular and processing requirements. In drug discovery, data hurdles are also common, as UBS recently noted in its assessment of the potential of generative AI in that domain.

When wrestling with such complexity, healthcare organizations must ensure they have experienced personnel in place who can maintain organized and standardized data recording processes. Skilled use of AI and machine learning tools can streamline data handling tasks.

2. Embrace an iterative approach to adopting AI for data management in clinical trials

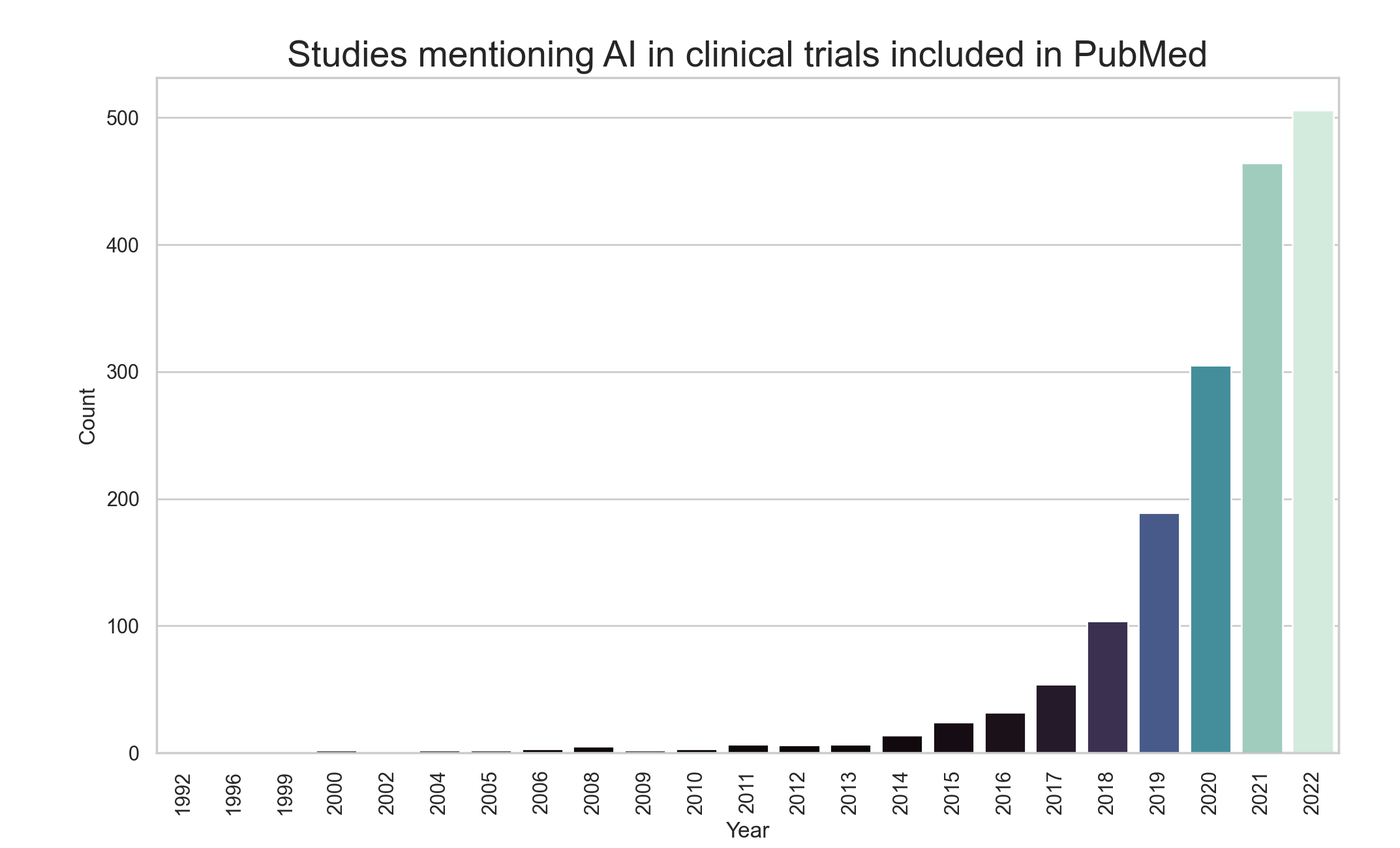

Bar chart showing the number of studies mentioning AI in clinical trials included in PubMed from the years 1992 to 2022. [Data from PubMed]

At ASCO, ConcertAI highlighted its DTS eScreening software, a machine learning–based tool designed to rank patients based on their predicted clinical trial eligibility. The tool assesses eligibility criteria based on clinical relevance and predicts the probability of meeting them, even when patient data are missing. The company assessed the tool in a multi-site clinical trial that involved 49 digitized criteria and a cohort of 10,156 patients. DTS eScreening employed a machine learning algorithm, LightGBM, to develop 17 models. These models predict patient eligibility for clinical trials based on various criteria, including the Eastern Cooperative Oncology Group (ECOG) score, a standard measure employed in cancer research. Other metrics used included cancer stage, lab results and vital signs, with an average prediction accuracy rate exceeding 99%.
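The ranking idea described above can be illustrated with a toy sketch. This is not ConcertAI's implementation, which the article describes only as LightGBM-based. The patient IDs, criterion names and probabilities below are hypothetical stand-ins for model output, and multiplying them together assumes the criteria are independent, which is a simplification.

```python
from math import prod

# Hypothetical per-criterion probabilities that a patient meets each criterion.
# 1.0 or 0.0 means the criterion was confirmed or ruled out from recorded data;
# values in between stand in for a model's prediction when data are missing.
patients = {
    "patient_a": {"ecog_0_1": 1.0, "stage_iii_iv": 1.0, "adequate_labs": 0.9},
    "patient_b": {"ecog_0_1": 0.6, "stage_iii_iv": 1.0, "adequate_labs": 0.7},
    "patient_c": {"ecog_0_1": 1.0, "stage_iii_iv": 0.0, "adequate_labs": 1.0},
}


def eligibility_score(criteria):
    """Combine per-criterion probabilities, naively assuming independence."""
    return prod(criteria.values())


def rank_patients(patients):
    """Return (patient_id, score) pairs, most likely eligible first."""
    scored = [(pid, eligibility_score(c)) for pid, c in patients.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank_patients(patients)
for pid, score in ranking:
    print(f"{pid}: {score:.2f}")
```

A patient with a criterion that is definitively not met drops to the bottom of the list, while a patient with missing data stays in contention with a reduced score.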

As the healthcare establishment grapples with the aftermath of the pandemic, including staffing shortages, AI strategies such as the DTS eScreening software promise to lead the way forward. Cambridge, Massachusetts–based ConcertAI, for instance, has observed how AI-assisted techniques can make “people sometimes 10 times more productive.” To put that in perspective, clinical trial paperwork that would typically consume a whole day can now be completed within one to two hours, Elton said.

3. A multi-dimensional approach in AI for data management in clinical trials

Until relatively recently, clinical drug development had been slow to adopt emerging technologies, as a 2019 article in NPJ Digital Medicine noted. But dramatic leaps in efficiency are now possible thanks to tools such as optical character recognition (OCR) and natural language processing (NLP). OCR extracts text from PDFs and similar sources, while NLP interprets human language. Elton underscored their value, emphasizing how quickly they can process a multitude of documents. For instance, OCR and NLP can systematically extract and organize data such as inclusion and exclusion criteria. This dramatically accelerates data processing, freeing researchers to focus on more important tasks.
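As a rough illustration of the extraction step, the sketch below sorts numbered items from plain text into inclusion and exclusion lists. It assumes OCR has already produced clean text and uses simple pattern matching in place of a real NLP pipeline. The protocol excerpt, the heading format and the `extract_criteria` helper are all hypothetical.

```python
import re

# Hypothetical protocol excerpt, as it might look after OCR has produced plain text.
protocol_text = """
Inclusion Criteria:
1. Age 18 years or older
2. ECOG performance status of 0 or 1
3. Histologically confirmed stage III or IV disease

Exclusion Criteria:
1. Prior exposure to the study drug
2. Life expectancy of less than six months
"""

# Section headings such as "Inclusion Criteria:" (case-insensitive).
HEADING = re.compile(r"^(inclusion|exclusion) criteria:?\s*$", re.IGNORECASE)
# List items that start with "1.", "2)", "-", "*" or a bullet character.
ITEM = re.compile(r"^\s*(?:\d+[.)]|[-*•])\s+(.*\S)\s*$")


def extract_criteria(text):
    """Group list items under the most recent inclusion/exclusion heading."""
    criteria = {"inclusion": [], "exclusion": []}
    section = None
    for line in text.splitlines():
        heading = HEADING.match(line.strip())
        if heading:
            section = heading.group(1).lower()
            continue
        item = ITEM.match(line)
        if item and section:
            criteria[section].append(item.group(1))
    return criteria


criteria = extract_criteria(protocol_text)
for kind, items in criteria.items():
    print(kind, items)
```

Real protocols are far less regular than this excerpt, which is where trained language models rather than fixed patterns come in.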

ConcertAI combines traditional methods such as random forests with large language models and deep domain knowledge. “We start off doing a lot of very traditional approaches, things you see when reading the literature,” Elton explained. But the company believes cross-disciplinary domain expertise is a point of differentiation. Elton described forming teams with diverse expertise. A team might include a Ph.D. physicist, a clinical informaticist, a cancer epidemiologist and a Python coder.

These expert teams approach AI model development as they would a scientific experiment, with the goal of achieving human-equivalent accuracy and recall. Elton says the approach contrasts starkly with the traditional AI development process, where 80% of the time is spent on data preparation and only 20% on model development and validation.

4. Narrowly applying AI optimizes performance

Elton elaborated, “Our AI system is still in its early stages and not yet widely exposed to diverse fields. We’re harnessing its algorithmic power in specific, narrow domains, which ensures optimal performance. One of our primary applications is having the AI analyze clinical files and recode diagnostic codes. We then compare these with actual diagnostic codes from medical records, turning our AI into an expert at coding medical information.”

“In just three days, we saw the AI’s coding accuracy reach tremendous heights, which was genuinely remarkable. What’s even more exciting is that we can provide the AI with templates or phenotypes, guiding it towards specific patterns and associations that we want it to find and it can support.”
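The comparison Elton describes, checking AI-assigned diagnostic codes against the codes already in the medical record, might be sketched as follows. The record IDs and ICD-10-style code values are illustrative only, and `compare_codes` is a hypothetical helper, not part of any ConcertAI product.

```python
# Illustrative data: record id -> diagnostic code.
record_codes = {"rec1": "C34.90", "rec2": "C50.911", "rec3": "C18.9", "rec4": "C61"}
ai_codes = {"rec1": "C34.90", "rec2": "C50.912", "rec3": "C18.9", "rec4": "C61"}


def compare_codes(predicted, reference):
    """Return the agreement rate and the records where the two codes differ."""
    shared = sorted(predicted.keys() & reference.keys())
    mismatches = [
        (rid, predicted[rid], reference[rid])
        for rid in shared
        if predicted[rid] != reference[rid]
    ]
    agreement = (len(shared) - len(mismatches)) / len(shared) if shared else 0.0
    return agreement, mismatches


agreement, mismatches = compare_codes(ai_codes, record_codes)
print(f"agreement: {agreement:.0%}")
for rid, predicted, actual in mismatches:
    print(f"{rid}: AI assigned {predicted}, record has {actual}")
```

Listing the mismatches alongside the agreement rate gives reviewers a concrete set of records to inspect, rather than a single headline number.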

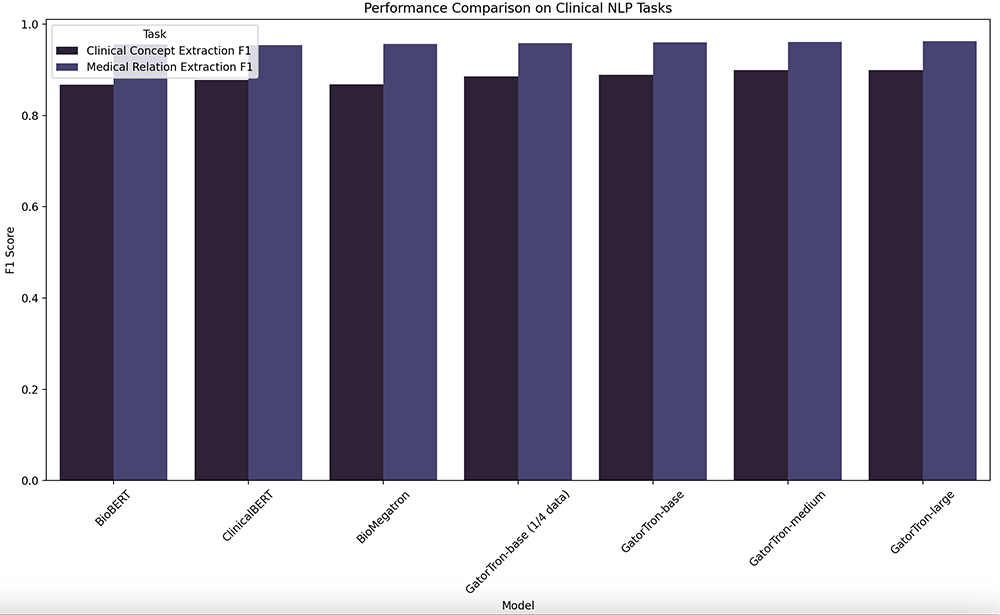

Another example of the power of a narrow AI focus comes courtesy of GatorTron, a sizeable clinical language model designed specifically to process and interpret electronic health records (EHRs). Highlighted in NPJ Digital Medicine, GatorTron boasts 8.9 billion parameters. Conversely, previous models were limited to around 110 million parameters.

F1 Score comparison of models for Clinical Concept Extraction and Medical Relation Extraction tasks. In machine learning, F1 Score is a metric that combines precision and recall to assess a model’s accuracy on a binary classification task. GatorTron variants, especially GatorTron-large with 8.9 billion parameters, show competitive or superior performance. [Data from NPJ Digital Medicine article “A large language model for electronic health records“]
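For readers unfamiliar with the F1 score named in the caption, here is a minimal sketch of how it is computed from counts of correct and incorrect predictions. The counts in the example are hypothetical and are not taken from the GatorTron study.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """Harmonic mean of precision and recall, or 0.0 when it is undefined."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted  # share of predictions that were right
    recall = true_positives / actual        # share of real items that were found
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical run: 80 concepts found correctly, 20 spurious, 10 missed.
score = f1_score(80, 20, 10)
print(f"F1 = {score:.3f}")
```

Because it is a harmonic mean, the score is pulled toward the weaker of precision and recall, so a model cannot score well by excelling at only one of them.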

5. Open-source methodology and transparency can fuel healthcare research progress

The use of open-source methodology in scientific research, particularly in AI, has the potential to fast-track innovation. Examples of such transparency come courtesy of the developers of the GatorTron AI model and Google’s DeepMind. By sharing their training code, they not only bolster the reliability and verifiability of their work but also pave the way for collaborative advances in the field.

Study authors posted code for training GatorTron at https://github.com/NVIDIA/Megatron-LM and https://github.com/NVIDIA/NeMo. They posted the code for preprocessing text data at https://github.com/uf-hobi-informatics-lab/NLPreprocessing and https://github.com/uf-hobi-informatics-lab/GatorTron. They developed GatorTron in partnership with GPU maker Nvidia.

In addition, Google’s DeepMind has open-sourced its work on protein structure prediction with AlphaFold, which was highlighted in Nature. The source code for the AlphaFold model, the trained weights and the inference script are available at https://github.com/deepmind/alphafold.

6. Supervise AI and prioritize explainability

The introduction of generative AI models such as ChatGPT 3.5 and ChatGPT 4 into ConcertAI’s workflow represents a significant step forward in efficiency and automation.

Elton further mentioned that ConcertAI is now focusing on defining use case domains and methodologies to add some level of supervision. “The problem with a lack of supervision is that you could end up with an AI system that is a black box,” Elton said. “If you can’t explain the AI’s decisions, you can’t know whether you can trust it or not. It could generate skewed or even hallucinated associations, which could be detrimental, especially in the healthcare sector.”

To avoid such scenarios and ensure that its AI is reliable, the company focuses on enhancing explainability. “We want to understand the ‘why’ behind the AI’s decisions, especially when those decisions could impact patient care and evidence generation,” Elton said.

7. Ensure accountability when adopting AI for data management in clinical trials

Elton elaborated on his team’s determination to fine-tune the operational domains and procedures of their AI models. This involves incorporating a level of supervision into the AI, an aspect he highlighted as vital when interacting with such technology. The process involves:

- Directing AI towards specific problems.

- Constraining its creative ability in data integration.

- Judiciously managing its access to varied data sets.

He stressed the potential hazards of a lack of supervision, noting, “An unsupervised AI system could resemble a black box, where the logic of its decisions is hidden, making it difficult to judge its trustworthiness. This situation might result in the AI forming distorted or even fabricated associations, which could lead to serious repercussions, particularly in healthcare. To prevent such situations and to guarantee the reliability of our AI, we are focusing our efforts on improving explainability. We strive to understand the ‘why’ behind the AI’s decisions, especially when those decisions could influence patient care and the generation of evidence.”

The U.S. Government Accountability Office has provided broader context for AI accountability by developing the federal government’s first framework to ensure accountability and responsible use of AI systems, as HBR has noted.

8. Preempt AI misuse scenarios

Elton acknowledged the possibility of AI misuse. In the clinical trial domain, for instance, AI could enable bad actors to re-identify individuals in de-identified data. But he does not believe that a moratorium on AI is an answer. “And our view is that things don’t disappear with moratoriums; they just go underground,” he said. “I would prefer to foster transparency, discussion and direct confrontation.”

As think tanks such as the Brookings Institution have noted, bad actors could exploit AI to orchestrate wide-scale disinformation campaigns. The misuse of AI could manifest in various forms, from deepfakes to financial manipulation and bias in decision-making systems. Such risks present a significant threat to digital, physical and political security, underscoring the need for policymakers, law enforcement and cybersecurity experts to prepare for and mitigate these abuses.

To counter the risks, Elton said it is vital to have participation from the wider community, governmental bodies and organizations like the FDA to inform AI adoption in healthcare. He concluded, “The problem is, how do you regulate something that moves faster than anything we’ve ever seen before.”
