Is “virtual man” science fiction or a scientific necessity for drug development? Analysts share their vision of future technologies and practices.

In 2020, when John Doe’s doctor asks if he would be willing to participate in a study for a new treatment for AD3, the subtype of Alzheimer’s disease with which he was recently diagnosed, he jumps at the chance. His doctor forwards John’s medical records to a national clinical trial super center, one of only two in the country, located 750 miles from where John lives.

The super center runs the details of John’s health history and genetic makeup through a predictive model that shows how John is likely to absorb, metabolize, and excrete the new medicine, taking into account his history of a weakened heart.

Soon, John receives an email from the super center. It confirms that his profile fits the screening criteria and asks him to call the nursing staff to discuss his participation in the clinical trial.

Three weeks later, John visits a remote clinic where he is given a slow-release implant containing the new medicine; he is closely supervised on an in-patient basis for five days. All the evidence suggests that John is responding well. He is then injected with a saline solution containing thousands of micron-sized robots, which will track how he responds in the long term.

The trial investigator explains how data captured by these robots will be transmitted to a central database at the super center and electronically filtered, using intelligent algorithms and pre-programmed safety parameters, to detect any abnormalities. If John experiences an adverse reaction, the system will immediately notify the super center, which will then contact him to discuss the implications. John’s doctor will also be informed if he requires medical care.
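In its simplest form, the filtering step described above compares each incoming reading with pre-programmed limits and flags anything that falls outside them. The Python sketch below illustrates only that idea. The parameter names, the limits and the patient ID are hypothetical and are not clinical guidance. The "intelligent algorithms" the scenario envisages would go well beyond fixed thresholds.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    patient_id: str
    parameter: str   # hypothetical parameter names, e.g. "heart_rate_bpm"
    value: float

# Hypothetical pre-programmed safety parameters: (lower limit, upper limit).
SAFETY_LIMITS = {
    "heart_rate_bpm": (50.0, 110.0),
    "systolic_bp_mmhg": (90.0, 160.0),
}

def find_abnormal(readings):
    """Return the readings that fall outside their configured safety limits.

    Readings for parameters with no configured limits are ignored.
    """
    alerts = []
    for r in readings:
        limits = SAFETY_LIMITS.get(r.parameter)
        if limits is None:
            continue
        low, high = limits
        if not (low <= r.value <= high):
            alerts.append(r)
    return alerts

stream = [
    Reading("john-doe", "heart_rate_bpm", 72.0),
    Reading("john-doe", "heart_rate_bpm", 124.0),
    Reading("john-doe", "systolic_bp_mmhg", 118.0),
]
for alert in find_abnormal(stream):
    # In the scenario, this is the point at which the super center is notified.
    print(f"notify super center: {alert.patient_id} {alert.parameter}={alert.value}")
```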

John goes home, reassured by the knowledge that he is being monitored around the clock. He forgets about the steady stream of data his nano-monitors are transmitting, but he knows that the super center is analyzing and collating them with clinical data from other patients, using continuous feedback loops to refine its research.

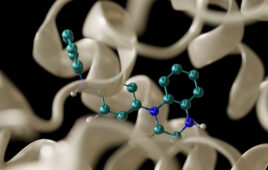

Welcome to the future of R&D. In this future, science and technologies that are only now emerging will play a far greater role in drug discovery and development. As described in “Pharma 2020: Virtual R&D, Which path will you take?”, a new PricewaterhouseCoopers report, bioinformatics and systems biology will be key to better understanding the human body and the pathophysiology of disease. And the evolution of “virtual man” will enable researchers to predict the effects of new drug candidates before they are tested in human beings.

Unraveling complex diseases

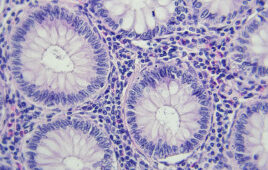

One of the greatest challenges to medicine in the 21st century will be complexity. Drug research and development is shifting its focus away from diseases that are relatively easy to treat and toward prevention and cure, which are typically more difficult. Advanced research technologies now position biology to resolve many of the problems that this greater complexity creates. The ability to manage biological complexity will allow drug makers and physicians to tackle many of the most intractable diseases.

Today, when researchers start investigating biological targets, their information is usually based on animal studies. They may know relatively little about how those targets are involved in the diseases they want to treat, or how those diseases progress in humans.

Generally, it is not until Phase 2 clinical trials that companies test whether modifying a particular target with a particular molecule is efficacious in treating a disease in humans. According to PAREXEL’s Pharmaceutical R&D Statistical Sourcebook 2001, just 11 percent of the molecules that enter preclinical development reach the market. This late testing helps explain that low figure, and hence why costs per drug are so high.
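The link between attrition and cost per drug can be expressed as simple arithmetic. If only a fraction p of candidates reach the market, each approved drug must, on average, also carry the spending on the candidates that fail. The calculation below makes a simplifying assumption that the article does not: every candidate is assumed to cost the same average amount C. Under that assumption, the 11 percent figure implies the following:

```latex
\text{spend per approved drug} \approx \frac{C}{p} = \frac{C}{0.11} \approx 9.1\,C
```

In other words, each marketed drug absorbs the cost of roughly nine candidates.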

What’s needed is a comprehensive understanding of how the human body works at the molecular level, together with a much better grasp of the pathophysiology of disease. This knowledge can then be used to build predictive models and generate further knowledge. Bioinformatics experts need to create a complete mathematical model of the molecular and cellular components of the human body, a “virtual man”. Such a model could be used to simulate the physiological effects of interacting with specific targets, identify which targets have a bearing on the course of a disease, and determine what sort of intervention is required (an agonist, antagonist, inverse agonist, opener, blocker, etc.).

At present, organizations are building models of different organs and cells, or creating three-dimensional images from the resulting data. But these models are only as good as the data on which they are based. That knowledge is accumulating, but much is still unknown about many physiological processes.

Ultimately, the data must also be integrated into a single, validated model that can predict the effects of modulating a biological target on the whole system. That model must also be capable of reflecting common genetic and phenotypic variations. The computing power required to run such a model would be enormous.

It may not be realistic to think that complete virtual patients will be available before 2020. However, predictive biosimulation is already playing a growing role in the R&D process. And this trend is likely to continue. Over the next 15 years, virtual cells, organs, and animals could be widely employed in pharmaceutical research, reducing the need to experiment on living creatures.

More predictive research

Traditional informatics systems are constrained by the structure of the data they use and by how they can represent those data. They cannot interpret what the data mean. For example, different sources may use different names for the same or closely related concepts (e.g., headache and migraine), and these terms cannot readily be connected. Conversely, where two concepts share the same name but are fundamentally different, they will be treated as identical. As a result, it is very difficult to aggregate data from multiple sources and make meaningful associations between them without the application of intelligent reasoning.

By contrast, semantic technologies will allow scientists to connect disparate data sets, query the data using “natural language”, and uncover correlations that would otherwise go unnoticed. This will make it easier to identify the links between a particular disease and the biological pathways it affects, or between a particular molecule and its impact on the human body.
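The naming problem, and how a semantic layer addresses it, can be sketched by mapping each source’s terms onto shared concept identifiers before the data are aggregated. In the Python example below, the vocabulary, the source names and the concept IDs are all hypothetical. A hand-written lookup table stands in for the ontologies and reasoning that real semantic technologies provide. The table is keyed on the source as well as the term. This lets two different words resolve to one concept and one word resolve to two different concepts.

```python
from collections import defaultdict

# Hypothetical vocabulary: (source, lower-cased term) -> shared concept ID.
# Synonyms: "myocardial infarction" and "heart attack" share one concept.
# Homonyms: "cold" means different things in different sources.
CONCEPT_MAP = {
    ("trial_db", "myocardial infarction"): "CONCEPT:MI",
    ("ehr_db", "heart attack"): "CONCEPT:MI",
    ("ehr_db", "cold"): "CONCEPT:COMMON_COLD",
    ("symptom_db", "cold"): "CONCEPT:FEELING_COLD",
}

def aggregate(records):
    """Group (source, term, patient) records by shared concept ID.

    Terms with no mapping are skipped rather than guessed at.
    """
    by_concept = defaultdict(set)
    for source, term, patient in records:
        concept = CONCEPT_MAP.get((source, term.lower()))
        if concept is not None:
            by_concept[concept].add(patient)
    return dict(by_concept)

records = [
    ("trial_db", "Myocardial infarction", "p1"),
    ("ehr_db", "heart attack", "p2"),
    ("ehr_db", "cold", "p3"),
    ("symptom_db", "cold", "p4"),
]
print(aggregate(records))
```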

Meanwhile, computer-aided molecule design will give researchers a much better starting point in the search for potent molecules and reduce the need to run high throughput screens to find hits (which is like looking for a needle in a haystack). It will still be necessary to test these molecules in in vitro and in vivo assays until complete models of the anatomical and physiological characteristics of the human body in a healthy and diseased state are available.

Accelerating development

As R&D moves closer to the virtual man, PricewaterhouseCoopers believes that it will be possible to “screen” drug candidates in a digital representation of a patient such as John Doe. This representation could be adjusted to reflect common genetic variations and disease traits, such as a weakened cardiovascular system. Screening would show whether a molecule interacts with any unwanted targets and produces side effects, and under what circumstances. Predictive analysis will then enable researchers to assess several things:

- how the molecule is likely to be absorbed, distributed, metabolized, and excreted;
- what long-term side effects it might have;
- what free plasma concentration is needed to provide the optimal balance between efficacy and safety; and
- what formulation and dosing levels might work best.
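This kind of prediction can be illustrated, very roughly, with the textbook one-compartment pharmacokinetic model for a single oral dose. In this model, plasma concentration follows C(t) = F·D·ka / (V·(ka − ke)) · (e^(−ke·t) − e^(−ka·t)), with ke = CL/V. The model is far simpler than anything a virtual man would use, and all parameter values below are hypothetical. The sketch also assumes, purely for illustration, that a weakened cardiovascular system shows up as halved clearance. The model yields total concentration. The free concentration mentioned above would be this value multiplied by the drug’s unbound fraction.

```python
import math

def concentration(t_h, dose_mg, f, ka, cl, v):
    """Total plasma concentration (mg/L) t_h hours after a single oral dose.

    One-compartment model with first-order absorption (ka, 1/h) and
    first-order elimination (ke = cl / v, 1/h). Here f is oral
    bioavailability, cl is clearance (L/h) and v is volume of distribution (L).
    """
    ke = cl / v
    if math.isclose(ka, ke):
        raise ValueError("this closed form requires ka != ke")
    coef = f * dose_mg * ka / (v * (ka - ke))
    return coef * (math.exp(-ke * t_h) - math.exp(-ka * t_h))

def t_max(ka, ke):
    """Time of peak concentration (h) for the same model."""
    return math.log(ka / ke) / (ka - ke)

def auc_inf(dose_mg, f, cl):
    """Total exposure (area under the curve, mg*h/L) from zero to infinity."""
    return f * dose_mg / cl

# Hypothetical drug and patient parameters, for illustration only.
DOSE, F, KA, V = 100.0, 0.8, 1.0, 50.0
for label, cl in [("typical clearance", 5.0), ("halved clearance", 2.5)]:
    tm = t_max(KA, cl / V)
    cmax = concentration(tm, DOSE, F, KA, cl, V)
    print(f"{label}: Tmax {tm:.1f} h, Cmax {cmax:.2f} mg/L, "
          f"AUC {auc_inf(DOSE, F, cl):.0f} mg*h/L")
```

In this toy model, halving clearance doubles total exposure (AUC) and raises the peak concentration. That is the sort of signal that might prompt a lower dose for a patient like John.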

The “birth” of virtual man is, of course, a nirvana that is still many years off. In the near term, advances in research and the use of new technologies are beginning to influence and enable parts of the development process.

Increasingly, new therapies will not be conventional pharmacological agents that can be tested in conventional ways. The development of clinical biomarkers and new technology platforms will likewise have a profound impact on the way all new therapies are tested. Once accurate biomarkers for diagnosing and treating patients are widely available, the industry will be able to stratify patients with different but related conditions. It can then test new medicines only in patients who have a specific disease subtype. That, in turn, will allow it to reduce the number and size of the clinical studies required to prove efficacy. Clinical biomarkers that are reliable surrogates for a longer-term endpoint, such as survival, will also help to shorten endpoint observation times.
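The link between stratification and trial size can be made concrete with the standard normal-approximation sample-size formula for comparing two means. That formula gives n = 2(z_(1-alpha/2) + z_(1-beta))^2 sigma^2 / delta^2 patients per arm. The values in the sketch below are hypothetical: the outcome standard deviation, the effect size, and the share of patients with the responsive subtype. The sketch also assumes that the drug has no effect outside that subtype. It ignores the extra variance a mixed population adds, so in an unselected trial the observed effect is simply diluted to half.

```python
import math
from statistics import NormalDist

def n_per_arm(delta, sigma, alpha=0.05, power=0.9):
    """Patients per arm for a two-sided, two-sample comparison of means
    (normal approximation), rounded up to a whole patient."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2)

SIGMA = 10.0         # hypothetical standard deviation of the outcome
DELTA_SUBTYPE = 5.0  # hypothetical treatment effect in the responsive subtype
FRACTION = 0.5       # hypothetical share of unselected patients with that subtype

# Unselected trial: the effect is diluted across non-responders.
unselected = n_per_arm(FRACTION * DELTA_SUBTYPE, SIGMA)
# Stratified trial: only patients with the responsive subtype are enrolled.
stratified = n_per_arm(DELTA_SUBTYPE, SIGMA)
print(unselected, stratified)
```

Because n scales with 1/delta^2, halving the observed effect under these assumptions roughly quadruples the number of patients needed per arm.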

Semantic technologies will play a major role in improving development as well as research. They will enable the industry to link clinical trial data with epidemiological and early research data, identify significant patterns, and use that information to modify the course of its studies without compromising their statistical validity. In addition, pervasive monitoring will allow Pharma to track patients in real time, wherever they are. This monitoring will include nano-scale devices that can measure compliance and absorption rates.

PricewaterhouseCoopers predicts that these scientific and technological advances will ultimately render the current model of development, with its four distinct phases of clinical testing, obsolete. At present, it is not until the end of Phase 2 that scientists have a reasonable grasp of the safety and efficacy of the molecules they are testing. Even then, that understanding may be fatally flawed. In an analysis by McKinsey¹ of 73 clinical drug candidates that failed in Phase 3 trials, 31 percent were pulled because they were unsafe and 50 percent because they were ineffective.

The development process will become much more iterative, with data on a molecule for one disease subtype fed back into the development of new molecules for other disease subtypes in the same cluster of related diseases. Information that is derived from developing a medicine for one variant of diabetes will be used to shape the development of medicines for other variants of diabetes.

These are not the only elements of trial design and development that will change. The current practice of conducting trials at multiple sites is highly inefficient. By 2020, it could be replaced by a system based on clinical super centers, perhaps one or two per country, that recruit patients, manage trials, and collate trial data.

Furthermore, with common data standards, electronic medical records, and electronic data interchange, it will be possible to manage clinical trials remotely and almost entirely electronically. This approach would accelerate trial recruitment, ensure that trials are managed more efficiently and consistently, and make trial data more transparent.

Modeling the future

If Pharma is to remain at the forefront of medical research and continue helping patients live longer, healthier lives, it must become much more innovative, as well as reduce the time and money it spends developing new therapies. Incremental improvements will no longer be enough; the industry will need to make a seismic shift to facilitate further progress in the treatment of disease.

New technologies can play a major role in helping Pharma move forward. These include emerging technologies, bioinformatics, systems biology, and ultimately the “virtual man”. With them, the pharmaceutical industry’s R&D process could be shortened by two-thirds and success rates could increase dramatically. Lower clinical trial costs could also potentially halve the cost per drug.

However, technology is not the only answer. Many companies will have to make significant organizational and behavioral changes. They will have to decide whether they want to produce mass-market medicines or specialty therapies, and whether they want to outsource most of their research or keep it in-house. The choices they make will have a profound bearing on the business models and mix of skills they require.

Connectivity—technological, intellectual and social—will ultimately enable us to make sense of ourselves and the diseases from which we suffer.

References

1. Maria A. Gordian, Navjot Singh, et al. “Why drugs fall short in late-stage trials.” The McKinsey Quarterly, November 2006. Accessed May 3, 2007.

About the Authors

Dr. Stephen Arlington consults extensively with company boards and senior management in the areas of strategy, R&D, and regulatory affairs. He is an active author of reports on the future direction and structure of the pharmaceutical industry.

Anthony Farino is an expert on pharmaceutical, biotech and medical products industries, covering components of the value chain including pharmaceutical sales and marketing, managed care operations, product development, and regulatory matters.

This article was published in Drug Discovery & Development magazine: Vol. 11, No. 10, October, 2008, pp. 16-22.
